We are often asked for our advice on high refresh rate monitors, which the industry has become awash with over the last couple of years. With newer and more powerful graphics cards and CPUs, the price of high refresh rate monitors dropping rapidly, and lots of monitor manufacturer marketing, it's become a hot topic of late.

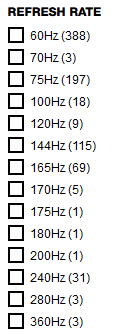

Once upon a time we all used 60Hz monitors and we were all very happy displaying up to 60FPS. However, with the ever-changing market and debates about frame rate giving players an advantage in competitive gaming, the monitor world started to evolve. In theory a monitor can be any refresh rate, but panels are made by only a few manufacturers (irrespective of the brand on the front) and it's pointless making one panel that's, say, 90Hz and another that's 95Hz as there is little between them. Today it's common to see 60Hz, 75Hz, 120Hz, 144Hz, 165Hz, 240Hz and 360Hz. There are others, but they are of negligible quantity. Here is a sample split from a monitor retailer site:

As you can see, 60Hz still dominates the market and will continue to do so, as 60Hz is still buttery smooth and many people may not see the advantage of, or want, more than what is already considered smooth. Many PC gamers are also making the jump from consoles, where they have been used to a lowly 24/30FPS, so 60Hz/FPS is already a big step up. That said, everything north of 60Hz makes up approximately half of the market, and we can't see that number doing anything other than claiming more share as the technology progresses and prices come down.

Now before I continue, to help you understand this article, if it's not already clear: 1Hz is 1FPS. By their very definitions:

Hz (hertz) is the number of cycles per second

FPS is short for Frames Per Second

Both share the same unit of time, the second, hence the direct 1:1 link.

It is also important to remember that a monitor will display up to a certain frequency whilst a computer will output a given FPS. For example, say you have a 144Hz screen and the PC is outputting 100FPS: the monitor will display 100FPS irrespective of its refresh rate. If the PC is outputting 180FPS, the 144Hz monitor will still only display 144FPS. In an ideal world a PC would output 144FPS to a 144Hz screen all the time (and there are ways of doing so, but that is another story). However, that's not how computers and games work: a computer will output the best frame rate it can depending on the settings and how demanding each part of a game is, so frame rate varies by a fair bit even within the same game.
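The relationship between what the PC outputs and what the monitor shows can be sketched in a couple of lines. This is a simplified illustration only: the function name `displayed_fps` is our own, and it ignores sync technologies and tearing.

```python
def displayed_fps(pc_fps: float, refresh_hz: float) -> float:
    """Frames per second actually shown, capped by the monitor's refresh rate."""
    return min(pc_fps, refresh_hz)


# The two examples from the text, plus the "ideal world" case.
for pc_fps in (100, 144, 180):
    shown = displayed_fps(pc_fps, 144)
    print(f"{pc_fps}FPS into a 144Hz monitor -> {shown}FPS shown")
```

In other words, the monitor's refresh rate is a ceiling, not a target the PC is guaranteed to reach.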

This is why FPS numbers are generally quoted as "average" FPS, a single number, when in reality a game will run between, say, 80 and 120FPS depending on the complexity of the point in the game the PC is tasked with. Average FPS looks to simplify this, and for the most part it works, as a game will spend around 80% of its time very close to that average; it's only a very small percentage (1-2%) of the time that a game will be outputting at the extreme ends of that range. How much a game deviates from that average is entirely game dependent. For the most part that deviation is small, but there are titles out there where it is significant. When dealing with FPS you should always look into this deviation; however, for the purpose of this guide we are only going to deal with "average FPS" so as not to make it too complex.
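To make the idea of an average and its deviation concrete, here is a minimal sketch. The FPS readings are invented purely for illustration (they are not measurements from any game), and the helper names and the 10% "close to average" tolerance are our own assumptions.

```python
from statistics import mean

# Invented FPS readings for illustration only, spanning the 80-120FPS
# range used as the example in the text. Not measured data.
samples = [80, 95, 98, 100, 100, 101, 102, 104, 105, 120]


def summarise(fps_samples):
    """Return (average, lowest, highest) for a list of FPS readings."""
    return mean(fps_samples), min(fps_samples), max(fps_samples)


def share_near_average(fps_samples, tolerance=0.10):
    """Fraction of readings within +/- tolerance of the average."""
    average = mean(fps_samples)
    near = [s for s in fps_samples if abs(s - average) <= tolerance * average]
    return len(near) / len(fps_samples)


average, lowest, highest = summarise(samples)
print(f"average {average:.1f}FPS, range {lowest}-{highest}FPS")
print(f"{share_near_average(samples):.0%} of readings within 10% of the average")
```

A single average hides the lowest and highest readings, which is why it is worth checking how far a title strays from it.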

FPS Science!

Most game developers and publishers will spend most of their time ensuring their title runs well at an acceptable frame rate. Generally speaking that means aiming for 60FPS. Some esports titles will aim for more and optimize graphics levels accordingly, but for the most part games need to hit 60+FPS, as that is what most monitor refresh rates are geared towards and what many consider perfectly smooth. However, as games get older, easier to run, further optimized or were simply made correctly in the first place, they will go comfortably beyond that unofficially accepted minimum and users will want to chase higher numbers.

The main thing to remember here is that the work involved scales directly with FPS. 120FPS is essentially twice as hard to achieve as 60FPS, meaning you need twice the raw graphical processing power to achieve it. 240FPS requires 4 times the graphical processing power of 60FPS. In the graphics card world this is a fairly big ask, as different GPU models tend to be only a dozen or a couple of dozen percent faster than the model below rather than 2 or 4 times more powerful. With the way the market is right now 120/144/165Hz is relatively achievable; however, when you start hitting 240FPS and upwards it's quite a challenge, even at 1080P resolution.
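One way to picture this is the time the hardware has to produce each frame, which is simply one second divided by the frame rate. The sketch below uses our own function names and assumes, as the paragraph above does, that GPU work scales linearly with FPS; real games will not follow this exactly.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / fps


def relative_gpu_work(target_fps: float, baseline_fps: float = 60.0) -> float:
    """Rough multiplier on GPU work, assuming work scales linearly with FPS."""
    return target_fps / baseline_fps


for fps in (60, 120, 144, 240):
    print(
        f"{fps}FPS: {frame_time_ms(fps):.2f}ms per frame, "
        f"{relative_gpu_work(fps):.1f}x the work of 60FPS"
    )
```

Halving the time available per frame is the same thing as asking for twice the throughput, which is why each step up the refresh rate ladder gets harder.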

Check out the estimated FPS of the following graphics cards in a few games:

Fortnite at 1080P on "ultra" settings:

| GPU | Average FPS |
| --- | --- |
| GTX 1660 SUPER | 92 |
| RTX 2060 | 91 |
| RTX 3060 | 120 |
| RTX 3070 | 133 |
| RTX 3080 | 151 |

COD: MW Engine (Warzone) at 1080P on "ultra" settings:

| GPU | Average FPS |
| --- | --- |
| GTX 1660 SUPER | 115 |
| RTX 2060 | 117 |
| RTX 3060 | 132 |
| RTX 3070 | 175 |
| RTX 3080 | 210 |

Valorant at 1080P on "ultra" settings:

| GPU | Average FPS |
| --- | --- |
| GTX 1660 SUPER | 184 |
| RTX 2060 | 189 |
| RTX 3060 | 202 |
| RTX 3070 | 277 |
| RTX 3080 | 341 |

As you can see, Fortnite and Warzone, two very big-hitting titles, will not hit the heights of 240FPS on "ultra" settings even with a monster RTX 3080 graphics card. It's only specific titles such as Valorant where you can hope to achieve 240FPS gameplay, and even then you need a very powerful RTX 3070 or better to do so. I suppose what we are trying to say here is that 240Hz gaming and above is difficult to do right now, and to actually do so you need to sacrifice one of the following:

1. Your wallet
2. Game settings
3. FPS

Given high FPS is the target, you either need to empty your wallet and invest in the very best hardware (which may not even hit the heights you are aiming for anyway) or turn settings down, and the latter is generally what many FPS-hungry gamers do. Some games now even have "competitive" presets built in, or community-recommended settings, to chase down every last frame. Let's take a look at Fortnite and Warzone again but with settings dialled down:

Fortnite at 1080P on "medium" settings:

| GPU | Average FPS |
| --- | --- |
| GTX 1660 SUPER | 197 |
| RTX 2060 | 190 |
| RTX 3060 | 228 |
| RTX 3070 | 283 |
| RTX 3080 | 320 |

COD: MW Engine (Warzone) at 1080P on "medium" settings:

| GPU | Average FPS |
| --- | --- |
| GTX 1660 SUPER | 230 |
| RTX 2060 | 228 |
| RTX 3060 | 240 |
| RTX 3070 | 339 |
| RTX 3080 | 406 |

As you can see, these numbers are much more likely to appease the frame rate chasers, but you get them at the cost of a less immersive experience, with reduced effects and graphical fidelity. This seems counterintuitive to us: games are made the way developers want you to experience them, and actively turning off effects and dialling down the settings means you will be missing out on things. However, if you are looking to get those frame rates up, this is sometimes the only way in certain titles.
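To see the effect of dropping settings at a glance, the sketch below checks which cards reach a 240FPS average in the Fortnite and Warzone tables above. The figures are copied from those tables; the data layout and the function name `gpus_hitting_target` are just our own way of arranging them.

```python
TARGET_FPS = 240

# Average FPS at 1080P, copied from the tables above.
RESULTS = {
    ("Fortnite", "ultra"): {
        "GTX 1660 SUPER": 92, "RTX 2060": 91, "RTX 3060": 120,
        "RTX 3070": 133, "RTX 3080": 151,
    },
    ("Fortnite", "medium"): {
        "GTX 1660 SUPER": 197, "RTX 2060": 190, "RTX 3060": 228,
        "RTX 3070": 283, "RTX 3080": 320,
    },
    ("Warzone", "ultra"): {
        "GTX 1660 SUPER": 115, "RTX 2060": 117, "RTX 3060": 132,
        "RTX 3070": 175, "RTX 3080": 210,
    },
    ("Warzone", "medium"): {
        "GTX 1660 SUPER": 230, "RTX 2060": 228, "RTX 3060": 240,
        "RTX 3070": 339, "RTX 3080": 406,
    },
}


def gpus_hitting_target(fps_by_gpu, target=TARGET_FPS):
    """Return the GPUs whose average FPS meets or exceeds the target."""
    return [gpu for gpu, fps in fps_by_gpu.items() if fps >= target]


for (game, preset), fps_by_gpu in RESULTS.items():
    hits = gpus_hitting_target(fps_by_gpu) or ["none"]
    print(f"{game} ({preset}): {', '.join(hits)}")
```

Bear in mind these are averages, so a card that only just reaches 240FPS on paper will spend part of its time below that figure.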

Resolution

Before we had high refresh rates, we had higher resolutions: in short, gamers chasing 1440P or 4K. However, the science behind this has very much evolved and is different. To understand how resolution plays a part, take a look at the table below, which shows how many pixels a screen has at each resolution:

| Resolution | Pixels |
| --- | --- |
| 1080P (1920x1080) | 2,073,600 |
| 1440P (2560x1440) | 3,686,400 |
| 4K (3840x2160) | 8,294,400 |

They are calculated by simply multiplying the number of horizontal pixels by the number of vertical pixels. Now each pixel needs an input from the GPU, and in theory controlling 2 pixels is twice the work of controlling a single pixel, so you need twice the raw GPU power to cover twice the resolution. Natively, this is effectively still true. However, as time has progressed, scaling options that were once either non-existent or a complete pain to set up now come built into games and are arguably almost a default choice when chasing high resolutions. How good scaling is depends on the title and resolution, but the short of it is that it allows you to run a game at, say, twice the resolution/number of pixels without needing exactly twice the raw processing power.
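The pixel counts in the table can be reproduced with a few lines, which also shows how each resolution compares with 1080P. The names used here are our own, chosen for illustration.

```python
# Width and height in pixels for each resolution in the table.
RESOLUTIONS = {
    "1080P": (1920, 1080),
    "1440P": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total pixels on screen: horizontal pixels multiplied by vertical pixels."""
    return width * height


base = pixel_count(*RESOLUTIONS["1080P"])
for name, (width, height) in RESOLUTIONS.items():
    pixels = pixel_count(width, height)
    print(f"{name}: {pixels:,} pixels ({pixels / base:.2f}x 1080P)")
```

Note that 4K is exactly 4 times 1080P, whilst 1440P sits at roughly 1.78 times rather than a clean doubling.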

To demonstrate this, let's go back to COD: MW Engine (Warzone) at 1080P, 1440P and 4K on "ultra" settings:

| GPU | Average FPS 1080P | Average FPS 1440P | Average FPS 4K |
| --- | --- | --- | --- |
| GTX 1660 SUPER | 115 | 83 | 50 |
| RTX 2060 | 117 | 85 | 51 |
| RTX 3060 | 132 | 97 | 59 |
| RTX 3070 | 175 | 125 | 74 |
| RTX 3080 | 210 | 151 | 90 |

The Warzone engine is relatively "scalable", in other words it scales fairly well, which makes it a good example. Take a look at the results, and let's home in on the RTX 3080 numbers. The 4K result is just under half that of 1080P, where you would expect it to be around a quarter as there are 4 times as many pixels to control. Likewise, you would expect the 1440P benchmark to be a little over half of the 1080P result, as there are nearly twice as many pixels to control (about 1.78 times), yet the result is actually just under 3/4 of the 1080P result.
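The gap between expectation and reality can be worked through as follows. This sketch uses the RTX 3080 figures from the table above; the "naive" estimate assumes FPS falls in direct proportion to pixel count, and the function name is our own.

```python
PIXELS = {"1080P": 1920 * 1080, "1440P": 2560 * 1440, "4K": 3840 * 2160}

# RTX 3080 average FPS in Warzone on "ultra", from the table above.
RTX_3080_FPS = {"1080P": 210, "1440P": 151, "4K": 90}


def naive_expected_fps(base_fps: float, base_pixels: int, target_pixels: int) -> float:
    """FPS you would expect if FPS dropped in direct proportion to pixel count."""
    return base_fps * base_pixels / target_pixels


for resolution in ("1440P", "4K"):
    expected = naive_expected_fps(
        RTX_3080_FPS["1080P"], PIXELS["1080P"], PIXELS[resolution]
    )
    actual = RTX_3080_FPS[resolution]
    print(
        f"{resolution}: naive estimate {expected:.1f}FPS, "
        f"table shows {actual}FPS ({actual / RTX_3080_FPS['1080P']:.0%} of 1080P)"
    )
```

In both cases the figure in the table is comfortably above the naive estimate.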

Delve into the RTX 3080 numbers fully, dividing the pixel count by the average FPS, and the return is 1 FPS for every 9,874 pixels at 1080P, whilst at 1440P it's 1 FPS for every 24,413 pixels and at 4K it's 1 FPS for every 92,160 pixels. 1440P is a little difficult to work with as it's not exactly twice or half the resolution of the others; however, 1080P and 4K are directly comparable as an exact 1:4 resolution scale, yet this pixels-per-FPS figure scales closer to 1:9!
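The figures above come from dividing the pixel count of each resolution by the RTX 3080's average FPS at that resolution. A short sketch of that arithmetic, using a function name of our own choosing:

```python
PIXELS = {"1080P": 2_073_600, "1440P": 3_686_400, "4K": 8_294_400}

# RTX 3080 average FPS in Warzone on "ultra", from the table above.
RTX_3080_FPS = {"1080P": 210, "1440P": 151, "4K": 90}


def pixels_per_fps(pixels: int, fps: float) -> float:
    """Pixels on screen for every 1FPS of average frame rate."""
    return pixels / fps


ratios = {
    name: pixels_per_fps(PIXELS[name], RTX_3080_FPS[name]) for name in PIXELS
}
for name, value in ratios.items():
    print(f"{name}: {int(value):,} pixels per 1FPS")

# Compare the 4K figure with the 1080P figure.
print(f"4K vs 1080P: 1:{ratios['4K'] / ratios['1080P']:.1f}")
```

The last line is where the "closer to 1:9" figure comes from: 4 times the pixels combined with a frame rate that falls from 210 to 90.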

The main takeaway here is that whilst FPS is directly 1:1 scalable, resolution is not. It is far easier, and thus less demanding on hardware, to double the resolution than it is to double the FPS. Of course, results like this vary from title to title: some games simply do not scale very well and will be closer to that 1:1 ratio when upping the resolution, but for the most part relatively well optimized games will yield much better results.